In the era of high-definition video content and AI-driven visual enhancement, EV-DeblurVSR has emerged as a powerful framework designed to handle video deblurring and super-resolution simultaneously. Video content often suffers from motion blur, low resolution, or compression artifacts, particularly when captured in low-light conditions, with fast-moving objects, or using consumer-grade devices. Traditional methods often treated deblurring and super-resolution as separate tasks, leading to suboptimal results and visual inconsistencies. EV-DeblurVSR integrates these two tasks into a unified pipeline, leveraging event-camera data as motion guidance, temporal modeling, and deep learning architectures to produce sharp, detailed, high-resolution video frames. This article explores the architecture, working principles, implementation techniques, use cases, troubleshooting methods, and performance optimization strategies for EV-DeblurVSR, aiming to provide a comprehensive guide for researchers, video engineers, and AI enthusiasts looking to enhance video quality efficiently.

1. Understanding Video Deblurring and Super-Resolution

Video deblurring is the process of removing motion or defocus blur from video frames, while super-resolution focuses on enhancing the resolution and details of low-resolution video frames. Motion blur can occur due to camera shake, fast-moving objects, or low shutter speeds. Low resolution is common in surveillance, smartphone captures, or compressed streaming content. EV-DeblurVSR combines both tasks in a single framework to recover sharp, high-resolution frames, preserving temporal consistency across sequences and maintaining fine details that separate, sequential approaches often fail to achieve. Understanding the underlying principles of both deblurring and super-resolution is critical for leveraging EV-DeblurVSR effectively.
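Synthetic training pairs are often generated from sharp high-resolution footage by applying a degradation model. The sketch below assumes grayscale NumPy frames, approximates motion blur by averaging consecutive sharp frames (a crude model of a long exposure), and downsamples by block averaging; real pipelines frequently use bicubic downsampling and may also add noise or compression. The function name `synthesize_blurry_lr` is hypothetical.

```python
import numpy as np


def synthesize_blurry_lr(sharp_frames: np.ndarray, scale: int) -> np.ndarray:
    """Turn T sharp HR frames of shape (T, H, W) into one blurry LR frame.

    Blur: mean over the T frames, approximating light integrated during a long exposure.
    Downsampling: average each non-overlapping scale x scale block.
    """
    _, h, w = sharp_frames.shape
    if h % scale or w % scale:
        raise ValueError("H and W must be divisible by scale")
    blurred = sharp_frames.mean(axis=0)
    return blurred.reshape(h // scale, scale, w // scale, scale).mean(axis=(1, 3))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sharp = rng.random((7, 64, 64))   # 7 sharp 64x64 frames
    lr = synthesize_blurry_lr(sharp, scale=4)
    print(lr.shape)                   # (16, 16)
```

Because the blurry low-resolution frame is derived from the sharp frames, the original high-resolution frames serve directly as the supervision target.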

2. The Architecture of EV-DeblurVSR

EV-DeblurVSR typically relies on deep convolutional neural networks (CNNs), recurrent modules, and event-based inputs:

-

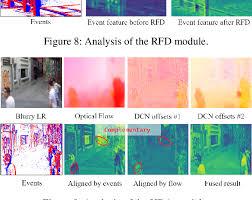

Event-based Processing: Some implementations use event cameras or inferred motion cues to detect dynamic changes between frames, helping guide the deblurring and super-resolution process.

-

Convolutional Layers: Extract spatial features from low-resolution or blurred frames, identifying edges, textures, and structures.

-

Temporal Modules: Recurrent neural networks (RNNs) or 3D convolutional layers capture temporal dependencies, ensuring smooth transitions between consecutive frames.

-

Upsampling Layers: Use transposed convolutions, pixel shuffle, or attention mechanisms to increase spatial resolution while maintaining image fidelity.

This architecture allows EV-DeblurVSR to generate videos that are not only sharp but also temporally coherent, avoiding flickering or unnatural artifacts common in frame-by-frame processing.
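Pixel shuffle is one common upsampling choice: a convolution first produces C·r² channels at low resolution, and those channels are then rearranged into C channels at r times the resolution. The sketch below shows only the rearrangement step in NumPy, following the channel ordering used by PyTorch's `torch.nn.PixelShuffle`; the preceding convolution is omitted.

```python
import numpy as np


def pixel_shuffle(x: np.ndarray, r: int) -> np.ndarray:
    """Rearrange (C*r*r, H, W) -> (C, H*r, W*r), like torch.nn.PixelShuffle on one sample.

    Output pixel (c, h*r + i, w*r + j) takes input channel c*r*r + i*r + j at (h, w).
    """
    c_in, h, w = x.shape
    if c_in % (r * r):
        raise ValueError("channel count must be divisible by r*r")
    c = c_in // (r * r)
    x = x.reshape(c, r, r, h, w)       # split channels into (c, i, j)
    x = x.transpose(0, 3, 1, 4, 2)     # reorder to (c, h, i, w, j)
    return x.reshape(c, h * r, w * r)  # merge (h, i) into rows and (w, j) into columns


if __name__ == "__main__":
    feats = np.arange(4).reshape(4, 1, 1)  # 4 channels at 1x1, upscale factor 2
    print(pixel_shuffle(feats, 2))         # one channel at 2x2
```

Unlike transposed convolution, the rearrangement itself has no parameters, which is one reason it is popular for avoiding checkerboard artifacts.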

3. Event-Based Inputs and Motion Guidance

Event inputs, recorded by event cameras or simulated from frames, provide fine-grained motion information that traditional frame-based video processing often misses. Event cameras report per-pixel changes in log intensity asynchronously and with very fine temporal resolution, so events are produced only where brightness changes, which lets the network focus on regions of motion. Integrating this information improves deblurring accuracy and helps apply super-resolution enhancement precisely where it is needed. This is particularly beneficial in fast-action sequences or low-light conditions, where conventional algorithms struggle to identify motion or preserve details.
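The principle behind event data can be illustrated with a simplified simulation. This sketch assumes two grayscale frames in [0, 1] and a hypothetical contrast threshold of 0.2, and counts how many times each pixel's log intensity crosses that threshold, producing a signed "event count frame". Real event streams carry individual timestamped events, and simulators interpolate between frames; the function name is hypothetical.

```python
import numpy as np


def event_count_frame(frame0: np.ndarray, frame1: np.ndarray,
                      threshold: float = 0.2, eps: float = 1e-3) -> np.ndarray:
    """Signed per-pixel count of log-intensity threshold crossings between two frames.

    Positive values stand for ON events (brighter), negative for OFF events (darker).
    `threshold` plays the role of an event camera's contrast threshold (value is hypothetical).
    """
    delta = np.log(frame1 + eps) - np.log(frame0 + eps)
    return np.trunc(delta / threshold).astype(np.int64)  # truncation rounds toward zero


if __name__ == "__main__":
    f0 = np.full((4, 4), 0.5)
    f1 = f0.copy()
    f1[1:3, 1:3] = 0.9          # a small region gets brighter, e.g. a moving object
    counts = event_count_frame(f0, f1)
    motion_mask = counts != 0    # events appear only where intensity changed
    print(motion_mask.sum())     # 4
```

Static pixels produce no events at all, which is why event data act as a natural attention signal for moving regions.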

4. Training Strategies for EV-DeblurVSR

Training EV-DeblurVSR involves several critical strategies to achieve optimal performance:

- Paired Datasets: High-resolution sharp videos paired with blurred, low-resolution versions for supervised learning.
- Loss Functions: Combining pixel-wise losses (MSE, L1), perceptual losses (VGG-based), and temporal consistency losses helps balance visual fidelity and smooth transitions.
- Data Augmentation: Techniques like random cropping, flipping, rotation, and noise injection help improve robustness.
- Progressive Training: Starting with low-resolution frames and gradually increasing the resolution helps stabilize training and improves convergence.

Effective training ensures that the model generalizes well to real-world videos with diverse motion patterns and lighting conditions.
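The combined objective can be sketched as a weighted sum. This NumPy version assumes grayscale clips of shape (T, H, W), omits the VGG perceptual term (it needs a pretrained network), and uses a simple un-warped temporal term that compares frame-to-frame changes in the output with those in the ground truth; many published temporal losses instead warp frames with optical flow. The weights and function names are hypothetical, and a real training loop would express the same arithmetic in PyTorch or TensorFlow so that gradients can flow.

```python
import numpy as np


def l1_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean absolute pixel error."""
    return float(np.mean(np.abs(pred - target)))


def temporal_consistency_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Penalize frame-to-frame changes in the output that the ground truth does not have.

    pred and target have shape (T, H, W); no motion compensation is applied.
    """
    pred_diff = pred[1:] - pred[:-1]
    target_diff = target[1:] - target[:-1]
    return float(np.mean(np.abs(pred_diff - target_diff)))


def total_loss(pred: np.ndarray, target: np.ndarray,
               w_pix: float = 1.0, w_temp: float = 0.1) -> float:
    """Weighted sum of the pixel and temporal terms (weights are illustrative)."""
    return w_pix * l1_loss(pred, target) + w_temp * temporal_consistency_loss(pred, target)


if __name__ == "__main__":
    target = np.zeros((3, 2, 2))
    flicker = target.copy()
    flicker[1] = 1.0                                   # middle frame jumps, then drops back
    print(l1_loss(flicker, target))                    # a third of the pixels are off by 1
    print(temporal_consistency_loss(flicker, target))  # every frame change is spurious
```

The example shows why the temporal term matters: a flickering output can have a modest per-pixel error while its frame-to-frame behavior is entirely wrong.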

5. Implementation Techniques

Implementing EV-DeblurVSR requires attention to both software and hardware:

- Frameworks: PyTorch or TensorFlow are commonly used for building and training the network.
- Hardware Requirements: High-performance GPUs with sufficient VRAM are necessary for training on high-resolution videos.
- Batch Processing: Sequences of frames must be batched efficiently while preserving the temporal context each frame needs.
- Checkpointing: Saving model weights periodically lets an interrupted training run resume without losing progress.

Practical implementation ensures reproducibility, scalability, and efficient use of computational resources.
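Long videos are usually processed in overlapping windows so that every frame sees some neighboring context without the whole sequence being loaded into GPU memory at once. A minimal sketch of the index bookkeeping, with hypothetical window and stride values; how overlapping predictions are merged (averaging, or keeping the most central copy) is a separate design choice.

```python
def sliding_windows(num_frames: int, window: int, stride: int) -> list[tuple[int, int]]:
    """Return half-open (start, end) frame ranges that together cover every frame."""
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    if stride > window:
        raise ValueError("stride larger than window would skip frames")
    if num_frames <= window:
        return [(0, num_frames)]
    starts = list(range(0, num_frames - window + 1, stride))
    if starts[-1] + window < num_frames:
        starts.append(num_frames - window)  # extra window flush with the last frame
    return [(s, s + window) for s in starts]


if __name__ == "__main__":
    for start, end in sliding_windows(num_frames=11, window=4, stride=3):
        print(start, end)  # clip frames[start:end] would be batched and sent to the model
```

Requiring the stride to be no larger than the window guarantees there are no gaps, and the final flush window keeps every clip the same length, which simplifies batching.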

6. Real-World Applications

EV-DeblurVSR has a wide range of applications across industries:

- Film and Video Production: Enhancing low-quality footage, restoring archival videos, or improving cinematic content.
- Surveillance: Improving clarity and resolution of security camera footage for better analysis.
- Sports Broadcasting: Enhancing fast-action sequences captured by high-speed cameras.
- Autonomous Vehicles: Assisting in video-based perception tasks by providing clearer input frames for object detection and tracking.
- Medical Imaging: Enhancing video captured during endoscopy or other procedures where motion blur is common.

These applications demonstrate the versatility and impact of EV-DeblurVSR in professional and research contexts.

7. Performance Optimization and Evaluation

Optimizing EV-DeblurVSR performance involves:

- Inference Optimization: Using mixed-precision (FP16) inference, TensorRT, or ONNX Runtime to reduce latency and memory usage.
- Quality and Temporal Consistency Metrics: Per-frame PSNR and SSIM measure fidelity to the ground truth, while temporal coherence measures check that the output is smooth and free of flicker.
- Batch Size and Sequence Length: Balancing computational efficiency against temporal context.
- Fine-Tuning: Adapting pre-trained models to specific video types (e.g., sports, low-light, surveillance) improves performance.

Systematic evaluation helps confirm that outputs meet the desired quality standards.
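PSNR follows directly from the mean squared error: PSNR = 10 · log10(MAX² / MSE). The sketch below assumes frames scaled to [0, 1]. Benchmarks differ on details such as averaging per-frame PSNR versus pooling the whole clip, evaluating on the Y channel only, or cropping borders, so reported numbers are only comparable under the same protocol. For SSIM, scikit-image provides `skimage.metrics.structural_similarity`.

```python
import numpy as np


def psnr(pred: np.ndarray, target: np.ndarray, max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(max_val^2 / MSE)."""
    mse = np.mean((pred.astype(np.float64) - target.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))


def video_psnr(pred: np.ndarray, target: np.ndarray, max_val: float = 1.0) -> float:
    """Average of per-frame PSNR over clips shaped (T, H, W)."""
    return float(np.mean([psnr(p, t, max_val) for p, t in zip(pred, target)]))


if __name__ == "__main__":
    gt = np.zeros((3, 8, 8))
    out = gt + 0.1               # uniform error of 0.1, so MSE = 0.01
    print(video_psnr(out, gt))   # about 20 dB
```

Note that PSNR is computed frame by frame, so it says nothing about flicker; it is best reported alongside a temporal measure.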

8. Common Challenges and Solutions

Users may encounter challenges such as:

- High Computational Costs: Training on full-resolution video sequences can be resource-intensive; solutions include patch-based training or reduced frame rates.
- Overfitting: Data augmentation and diverse training datasets reduce the risk of the model memorizing specific sequences.
- Flickering: Using temporal consistency losses and motion-aware modules reduces frame-to-frame inconsistencies.
- Real-Time Processing: Combining model pruning, quantization, and efficient architectures enables faster inference.

Addressing these challenges makes EV-DeblurVSR more practical in production environments.
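Patch-based training has one subtlety for joint deblurring and super-resolution: the LR crop and the HR crop must cover the same scene region, and every frame in the clip (and any accompanying event data) must receive the same crop and flip, otherwise the temporal relationship the model is supposed to learn is destroyed. A sketch using NumPy's random `Generator`; the function name and patch size are hypothetical.

```python
import numpy as np


def paired_random_crop(lr: np.ndarray, hr: np.ndarray, lr_patch: int, scale: int,
                       rng: np.random.Generator) -> tuple[np.ndarray, np.ndarray]:
    """Crop aligned patches from an LR clip (T, h, w) and its HR clip (T, h*scale, w*scale)."""
    t, h, w = lr.shape
    if hr.shape != (t, h * scale, w * scale):
        raise ValueError("HR clip must be exactly `scale` times larger than the LR clip")
    if lr_patch > min(h, w):
        raise ValueError("patch larger than frame")
    top = int(rng.integers(0, h - lr_patch + 1))
    left = int(rng.integers(0, w - lr_patch + 1))
    # The same window is used for every frame; HR coordinates are the LR ones times `scale`.
    lr_crop = lr[:, top:top + lr_patch, left:left + lr_patch]
    hr_crop = hr[:, top * scale:(top + lr_patch) * scale, left * scale:(left + lr_patch) * scale]
    if rng.random() < 0.5:  # horizontal flip, applied identically to all frames and both clips
        lr_crop, hr_crop = lr_crop[:, :, ::-1], hr_crop[:, :, ::-1]
    return lr_crop, hr_crop


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    lr = rng.random((5, 32, 32))
    hr = lr.repeat(4, axis=1).repeat(4, axis=2)  # stand-in HR clip for the demo
    lr_p, hr_p = paired_random_crop(lr, hr, lr_patch=16, scale=4, rng=rng)
    print(lr_p.shape, hr_p.shape)  # (5, 16, 16) (5, 64, 64)
```

Cropping in LR coordinates and multiplying by the scale factor avoids the off-by-a-few-pixels misalignment that appears when the two crops are sampled independently.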

9. Comparison with Other Methods

Unlike traditional sequential deblurring and super-resolution approaches, EV-DeblurVSR:

- Handles both tasks jointly: Deblurring and super-resolution are learned together across frames, which helps maintain temporal consistency.
- Uses motion guidance: Event-based or optical-flow cues improve accuracy.
- Reduces artifacts: Integrated processing avoids amplifying noise or residual blur between separate steps.
- Scales across settings: Can be adapted to various resolutions and frame rates.

These advantages make it a strong candidate among current video enhancement methods.

10. Future Directions and Research Opportunities

Future research on EV-DeblurVSR could explore:

- Integration with multi-modal inputs: Combining audio, depth maps, or other sensory data.
- Lightweight architectures: Efficient models suitable for mobile devices or edge computing.
- Adaptive frame processing: Dynamically adjusting enhancement intensity based on motion or scene complexity.
- Self-supervised or unsupervised learning: Reducing dependence on paired datasets for training.

These directions could expand the applicability and efficiency of EV-DeblurVSR for diverse use cases.

Conclusion

EV-DeblurVSR represents a significant advancement in video enhancement technology by unifying deblurring and super-resolution into a single, temporally coherent framework. By leveraging deep learning architectures, event-based motion guidance, and temporal modeling, it delivers sharp, high-resolution video frames even under challenging conditions such as low light, fast motion, or low-quality source material. Implementing, training, and optimizing EV-DeblurVSR requires careful attention to hardware, datasets, loss functions, and temporal coherence, but the resulting improvements in video quality are substantial. Its applications across film production, surveillance, autonomous systems, sports, and medical imaging underscore its versatility. As research continues, EV-DeblurVSR is poised to become an essential tool in both professional and research video processing workflows.

Frequently Asked Questions (FAQ)

Q1: What is EV-DeblurVSR used for?

It is used to simultaneously remove motion blur and enhance the resolution of video frames, producing sharp and detailed videos.

Q2: How does EV-DeblurVSR differ from traditional methods?

Unlike sequential approaches, it jointly handles deblurring and super-resolution, maintaining temporal consistency and reducing artifacts.

Q3: Can EV-DeblurVSR be used in real-time applications?

With optimization techniques such as model pruning, quantization, and efficient GPU usage, it can be adapted for near real-time processing.

Q4: What datasets are used for training EV-DeblurVSR?

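High-resolution video datasets paired with synthetically blurred, low-resolution versions are commonly used. For event-guided models, each clip also needs a matching event stream, either recorded with an event camera or simulated from the video frames.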

Q5: Is EV-DeblurVSR suitable for mobile devices?

Current implementations are GPU-intensive, but research into lightweight architectures and edge computing could make it feasible for mobile deployment.